Introduction

Artificial Intelligence (AI) is no longer just a futuristic concept; it’s an integral part of our daily lives, from personalised recommendations on streaming services to complex decision-making systems in healthcare and finance.

As AI continues to evolve and permeate every aspect of society, ethical AI development has become a pressing concern.

Ethics in AI aren’t just about following rules; they’re about ensuring that the technology we create aligns with our values and societal norms. As AI systems take on more responsibilities, from diagnosing diseases to determining loan eligibility, the potential for harm grows if they aren’t developed responsibly.

This post explores what it means to create ethical AI, the real-world consequences of unethical AI, the challenges developers face, and how we can overcome them. By understanding and addressing these challenges, we can build AI that benefits everyone, not just a select few.

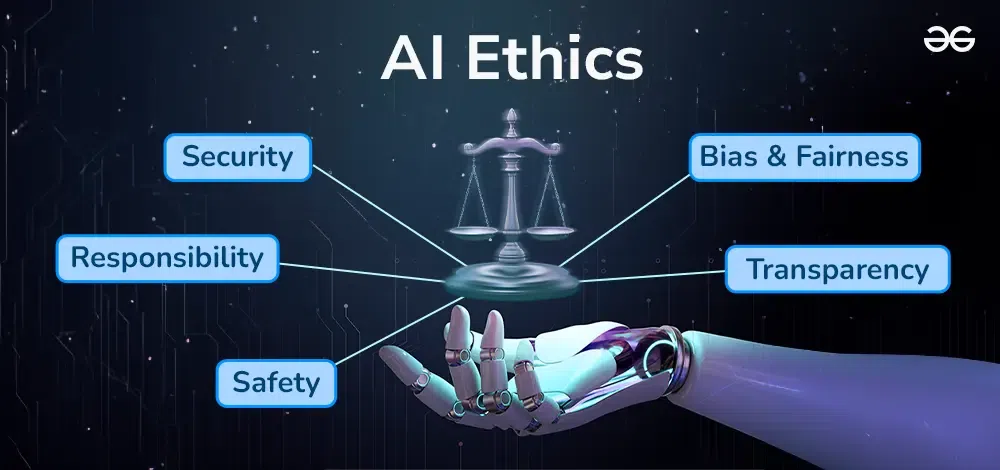

1. Understanding Ethical AI: What Does It Mean?

Ethical AI refers to developing and deploying AI systems that operate within the boundaries of moral and ethical guidelines. It’s about ensuring that AI behaves in ways that are consistent with societal values, respects human rights, and minimises harm.

Source: Jaro Education

While general AI development focuses on creating intelligent systems, ethical AI goes a step further by embedding moral considerations into the design and deployment of these systems.

This difference is crucial. For developers, it means considering the broader implications of their work, not just technical performance. For users, it means being able to trust that the AI they interact with was designed with their best interests in mind.

Take, for example, facial recognition technology. While it can be beneficial for security purposes, it has also been criticised for its potential to infringe on privacy rights and its tendency to be biased against certain racial groups.

In one notable case, a study from the MIT Media Lab (the “Gender Shades” project) found that commercial facial-analysis systems were markedly less accurate at classifying the gender of darker-skinned people, and least accurate for darker-skinned women. This shows how ethical AI differs from general AI development: a system can look accurate on average while failing specific groups, and without ethical considerations such systems can cause more harm than good.
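Failures like this are easy to miss if a model is only judged by one overall accuracy figure. The sketch below shows the basic idea of a disaggregated evaluation: computing accuracy separately for each intersectional subgroup. The function name `accuracy_by_group` and the toy labels are hypothetical, made up for illustration, and are not data from the study.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} so that errors hidden by an overall average become visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical toy data: each group is a (skin type, gender) pair.
y_true = ["F", "M", "F", "M", "F", "M", "F", "M"]
y_pred = ["F", "M", "F", "M", "M", "M", "M", "M"]
groups = [("lighter", "F"), ("lighter", "M")] * 2 + [("darker", "F"), ("darker", "M")] * 2

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(group, f"{acc:.2f}")
```

In this toy example the overall accuracy is 0.75, which sounds acceptable, yet the darker-skinned women subgroup scores 0.00.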

2. The Real-Life Impact of Unethical AI

The consequences of unethical AI are not hypothetical; they now affect real people. One of the most discussed examples is biased algorithms in criminal justice. In the United States, AI systems have been used to assess the likelihood of a defendant reoffending, a process known as risk assessment.

However, studies have shown that these systems often have a racial bias, disproportionately labelling Black defendants as high-risk compared to their white counterparts. This perpetuates existing inequalities and undermines trust in the justice system.
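One common way to make “disproportionately labelling” precise is to compare false positive rates: among people who did not go on to reoffend, what share were still flagged as high-risk? The minimal sketch below computes that rate per group. The group names and numbers are hypothetical placeholders, not figures from any real risk-assessment tool.

```python
def false_positive_rate(labels, predictions):
    """Share of people who did not reoffend (label 0) but were flagged high-risk (prediction 1)."""
    flags_for_negatives = [p for y, p in zip(labels, predictions) if y == 0]
    if not flags_for_negatives:
        return float("nan")  # undefined when the group has no non-reoffenders
    return sum(flags_for_negatives) / len(flags_for_negatives)

def fpr_by_group(labels, predictions, groups):
    """Compute the false positive rate separately for each group."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        result[g] = false_positive_rate([labels[i] for i in idx],
                                        [predictions[i] for i in idx])
    return result

# Hypothetical toy data: 1 = reoffended / flagged high-risk, 0 = did not / flagged low-risk.
labels      = [0, 0, 0, 0, 1,   0, 0, 0, 0, 1]
predictions = [1, 1, 0, 0, 1,   1, 0, 0, 0, 1]
groups      = ["group_a"] * 5 + ["group_b"] * 5
print(fpr_by_group(labels, predictions, groups))
```

Here non-reoffenders in `group_a` are wrongly flagged twice as often as those in `group_b` (0.5 versus 0.25), even though both groups contain the same number of actual reoffenders.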

Another example is the use of AI in hiring processes. Some companies have adopted AI to screen job applicants, aiming to streamline recruitment. However, these systems have been found to inadvertently favour some demographic groups over others.

For instance, an AI system used by a major tech company was found to be biased against female applicants because it was trained on resumes submitted predominantly by men, reflecting the company’s existing workforce.
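A simple screen for this kind of bias in hiring is to compare selection rates across groups. In the United States, the “four-fifths rule” of thumb from federal employee-selection guidelines treats a group’s selection rate below 80% of the highest group’s rate as a warning sign of adverse impact. The sketch below applies that ratio to hypothetical screening outcomes; the function names and data are illustrative assumptions, not part of any real hiring system.

```python
def selection_rates(decisions, groups):
    """Fraction of applicants in each group who were advanced (decision == 1)."""
    rates = {}
    for g in set(groups):
        outcomes = [d for d, grp in zip(decisions, groups) if grp == g]
        rates[g] = sum(outcomes) / len(outcomes)
    return rates

def impact_ratios(decisions, groups):
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no group is favoured
    return {g: r / best for g, r in rates.items()}

# Hypothetical screening outcomes: 1 = advanced to interview, 0 = rejected.
decisions = [1, 1, 1, 0, 1,   1, 0, 0, 0, 1]
groups    = ["men"] * 5 + ["women"] * 5
ratios = impact_ratios(decisions, groups)
flagged = sorted(g for g, r in ratios.items() if r < 0.8)  # four-fifths rule of thumb
print(ratios, flagged)
```

A ratio below 0.8 does not prove discrimination on its own, but it is a cheap signal that a screening model deserves closer scrutiny.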

The impact of such biases can be profound, leading to lost opportunities, discrimination, and the reinforcement of social inequalities. These examples show that it is not enough to develop AI; it must be developed in a fair, transparent, and accountable way.

Learning from these mistakes is crucial to avoid repeating them and ensure that AI serves all of humanity, not just a privileged few.

3. Key Challenges in Developing Ethical AI

Developing ethical AI is fraught with challenges, many of which stem from the inherent complexities of both AI technology and human society. Below are some of the most pressing issues:

Bias in Data and Algorithms: One of the most significant challenges in ethical AI development is addressing bias. AI systems are only as good as the data they are trained on, and if that data is biased, the AI will be too. Bias can enter the system in various ways—through historical data that reflects past discrimination, biased data collection processes, or even through the subjective choices of the developers themselves.

For example, if an AI system is trained primarily on data from a specific demographic, it may perform poorly for others. This challenge is both technical and ethical, because biased AI can lead to unfair outcomes.
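Skewed training data can often be caught before a model is trained at all by comparing each group’s share of the training set with its share of the population the system will serve. The sketch below assumes such reference shares are known; the group labels, the 50/50 split, and the 0.1 tolerance are hypothetical choices for illustration.

```python
from collections import Counter

def representation_gaps(train_groups, reference_shares):
    """Training-data share minus reference-population share for each group.

    Negative values mean the group is under-represented in the training data.
    """
    counts = Counter(train_groups)
    n = len(train_groups)
    return {g: counts.get(g, 0) / n - share for g, share in reference_shares.items()}

# Hypothetical: 80% of training examples come from group_a, but the
# population the model will serve is assumed to be split 50/50.
train_groups = ["group_a"] * 80 + ["group_b"] * 20
reference = {"group_a": 0.5, "group_b": 0.5}
gaps = representation_gaps(train_groups, reference)
under_represented = [g for g, gap in gaps.items() if gap < -0.1]  # illustrative tolerance
print(gaps, under_represented)
```

A check like this only catches imbalance in group counts; it cannot detect bias that is already baked into the labels themselves, such as historical hiring decisions.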

Transparency and Accountability: AI systems often operate as “black boxes,” meaning their decision-making processes are difficult to inspect or explain, sometimes even for their creators. This lack of transparency makes it difficult to hold anyone accountable when things go wrong.

For instance, if an AI system denies a loan application, the applicant may not know why or have any recourse to challenge the decision. Ensuring transparency and accountability in AI is challenging because it requires balancing technical feasibility with the need to protect proprietary algorithms and data.
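For simple models, part of this problem can be addressed by reporting which factors pushed a decision the wrong way. The sketch below assumes a hypothetical linear (logistic) credit score whose weights are invented for illustration; each feature’s contribution is just its weight times its value, and the most negative contributions become the “reasons” given to the applicant. Measuring contributions against zero is a simplification; real systems often compare against a reference applicant instead.

```python
import math

# Hypothetical weights for a simple logistic credit model (not any real lender's model).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "missed_payments": -0.8}
BIAS = -1.0

def approval_probability(applicant):
    """Logistic function of a weighted sum of the applicant's features."""
    z = BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))

def reason_codes(applicant, top_k=2):
    """Features that lowered the score most, largest negative contribution first."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negatives[:top_k]]

applicant = {"income_k": 30, "debt_ratio": 0.6, "missed_payments": 3}
print(f"approval probability: {approval_probability(applicant):.3f}")
print("main reasons for denial:", reason_codes(applicant))
```

Deep neural networks do not decompose this neatly, which is exactly why the transparency problem is harder for them.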

Privacy Concerns: AI systems often require vast amounts of data to function effectively, raising significant privacy concerns. For example, AI-driven health applications collect sensitive personal data to provide accurate recommendations.

Without proper safeguards, however, this data can be misused or exposed in a breach, leading to severe privacy violations. The challenge lies in developing AI that respects privacy while still being effective. Striking this balance is particularly difficult when data is considered a valuable asset.
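One established technique for balancing usefulness and privacy, not discussed above, is differential privacy: publishing statistics with carefully calibrated random noise so that no single person’s record noticeably changes the result. The sketch below shows the Laplace mechanism for a simple count. The records are hypothetical, and `epsilon` is the privacy budget; smaller values add more noise and give stronger privacy.

```python
import random

def private_count(records, predicate, epsilon, rng=random):
    """Return a noisy count satisfying epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person's record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1 / epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    scale = 1.0 / epsilon
    # The difference of two independent Exponential(rate = 1 / scale) draws
    # is Laplace-distributed with mean 0 and the given scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise

# Hypothetical health-app records: every third user has the condition (100 of 300).
records = [{"has_condition": i % 3 == 0} for i in range(300)]
rng = random.Random(7)
print(private_count(records, lambda r: r["has_condition"], epsilon=0.5, rng=rng))
```

The printed value will be close to 100 but not exactly 100, which is the point: analysts still get a useful aggregate, while any individual’s presence in the data is masked.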

Autonomy and Control: As AI systems become more autonomous, there is a growing fear that they could act independently of human control, leading to unintended consequences. For example, an AI system used in military applications could make life-and-death decisions without human intervention, raising ethical questions about responsibility and control.

The challenge is ensuring that AI remains under human oversight while benefiting from its autonomous capabilities.
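A common engineering pattern for keeping humans in control is to let the system act on its own only when it is both confident and the stakes are low, and to route everything else to a person. The sketch below is a minimal, hypothetical version of such a gate; the `Decision` fields and the 0.9 threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str          # what the model proposes to do
    confidence: float    # model's confidence in the proposal, 0.0 to 1.0
    high_stakes: bool    # e.g. affects health, liberty, or safety

def route(decision: Decision, confidence_threshold: float = 0.9) -> str:
    """Return 'auto' only for confident, low-stakes decisions; everything else goes to a person."""
    if decision.high_stakes or decision.confidence < confidence_threshold:
        return "human_review"
    return "auto"

print(route(Decision("approve refund", 0.97, high_stakes=False)))
print(route(Decision("deny treatment", 0.99, high_stakes=True)))
```

Note that high-stakes decisions always go to a human regardless of confidence, since a model can be confidently wrong.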

4. Strategies for Overcoming Ethical Challenges in AI Development

Despite the challenges, there are strategies that developers and organisations can adopt to create more ethical AI systems:

Diverse and Inclusive Teams: One of the most effective ways to minimise bias in AI is to have diverse teams working on its development. Diversity in gender, race, ethnicity, and background brings different perspectives, which can help identify and address potential biases that might otherwise go unnoticed. Companies like Google and Microsoft have recognised this and are trying to build more inclusive teams.

By ensuring that a variety of viewpoints are considered during the development process, these companies are better equipped to create AI systems that are fairer and more representative.

Rigorous Testing and Validation: Before deploying AI systems, it’s crucial to conduct thorough testing to identify and fix ethical issues. This includes stress-testing the system with various scenarios to ensure it performs consistently across different groups and situations.

Regular audits and validation processes should be part of this approach, helping to catch any biases or errors that could lead to unethical outcomes. For example, IBM has released AI Fairness 360, an open-source toolkit that helps developers test their models for bias before deployment.
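Fairness checks can be wired into a release process just like ordinary tests. The sketch below is plain Python and does not use IBM’s toolkit; it computes the gap in true positive rates between groups (sometimes called “equal opportunity”) on a held-out test set and blocks deployment if the gap exceeds a tolerance. The function names, the data, and the 0.1 tolerance are hypothetical.

```python
def true_positive_rate(labels, preds):
    """Share of truly positive cases (label 1) that the model also predicts as positive."""
    preds_for_positives = [p for y, p in zip(labels, preds) if y == 1]
    if not preds_for_positives:
        raise ValueError("group has no positive examples; TPR is undefined")
    return sum(preds_for_positives) / len(preds_for_positives)

def equal_opportunity_gap(labels, preds, groups):
    """Largest difference in true positive rate between any two groups."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        rates[g] = true_positive_rate([labels[i] for i in idx], [preds[i] for i in idx])
    return max(rates.values()) - min(rates.values()), rates

def pre_deployment_check(labels, preds, groups, max_gap=0.1):
    """Raise if the TPR gap exceeds max_gap (an illustrative tolerance, not a standard)."""
    gap, rates = equal_opportunity_gap(labels, preds, groups)
    if gap > max_gap:
        raise ValueError(f"TPR gap {gap:.2f} exceeds {max_gap}: {rates}")
    return rates

# Hypothetical held-out test set.
labels = [1, 1, 1, 1, 0,   1, 1, 1, 1, 0]
preds  = [1, 1, 1, 1, 0,   1, 1, 0, 0, 0]
groups = ["group_a"] * 5 + ["group_b"] * 5
try:
    pre_deployment_check(labels, preds, groups)
except ValueError as err:
    print("blocked:", err)
```

Which fairness metric to test is itself an ethical choice: different metrics can conflict, so teams should decide and document which ones matter for their use case.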

Clear Ethical Guidelines: Creating and adhering to ethical guidelines is essential for responsible AI development. These guidelines should outline the principles that guide AI design and deployment, such as fairness, transparency, and accountability.

Organisations like the IEEE and the European Union have developed frameworks that provide a foundation for ethical AI practices. By following such guidelines, developers can ensure that their AI systems align with societal values and legal requirements.

Ongoing Monitoring and Adjustment: Ethical AI development doesn’t stop once the system is deployed; it requires ongoing monitoring and adjustment. This means continuously evaluating the AI’s performance, looking for any signs of bias or unethical behaviour, and making necessary adjustments.

For instance, Google regularly updates its AI systems to ensure they remain fair and effective over time. This proactive approach helps to prevent issues before they become significant problems, ensuring that the AI remains aligned with ethical standards.
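In practice, ongoing monitoring often means tracking the same fairness signals on live decisions that were checked before launch. The sketch below keeps a sliding window of recent outcomes per group and raises an alert when a group’s positive-outcome rate falls below 80% of the best group’s rate. The class name, window size, and reuse of the four-fifths threshold are illustrative assumptions.

```python
from collections import defaultdict, deque

class FairnessMonitor:
    """Tracks recent positive-outcome rates per group in a sliding window."""

    def __init__(self, window=500, min_ratio=0.8):
        self.min_ratio = min_ratio
        # Each group keeps only its most recent `window` outcomes.
        self.outcomes = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, outcome):
        self.outcomes[group].append(int(outcome))

    def rates(self):
        return {g: sum(q) / len(q) for g, q in self.outcomes.items() if q}

    def alerts(self):
        """Groups whose rate is below min_ratio times the best group's rate."""
        rates = self.rates()
        if not rates or max(rates.values()) == 0:
            return []
        best = max(rates.values())
        return sorted(g for g, r in rates.items() if r / best < self.min_ratio)

monitor = FairnessMonitor(window=4)
for _ in range(4):
    monitor.record("group_a", 1)
    monitor.record("group_b", 1)
print(monitor.alerts())  # []
for _ in range(3):
    monitor.record("group_b", 0)
print(monitor.alerts())  # ['group_b']
```

Because old outcomes fall out of the window, the monitor reacts to recent drift rather than being diluted by months of historical data.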

5. The Role of Policymakers and Organisations in Ethical AI

While developers play a critical role in ethical AI, policymakers and organisations also have significant responsibilities. Government policies and regulations can provide a framework for ethical AI development by setting minimum standards and enforcing compliance.

For example, the European Union’s General Data Protection Regulation (GDPR) has set strict rules on data privacy, impacting how AI systems collect and use personal data. These regulations help protect individuals’ rights and ensure that AI is used responsibly.

Organisations, for their part, can set industry standards that go beyond legal requirements. By adopting best practices and promoting transparency, they can take the lead in ethical AI development.

For instance, the Partnership on AI, a coalition of tech companies, researchers, and civil society organisations, has established guidelines for the responsible development and deployment of AI. These guidelines encourage companies to prioritise ethics in their AI strategies, fostering a culture of responsibility and accountability.

Existing frameworks like the IEEE’s Ethically Aligned Design provide a robust foundation for ethical AI practices. These frameworks offer developers and organisations a roadmap for integrating ethical considerations into their AI systems, ensuring they operate in ways consistent with human values.

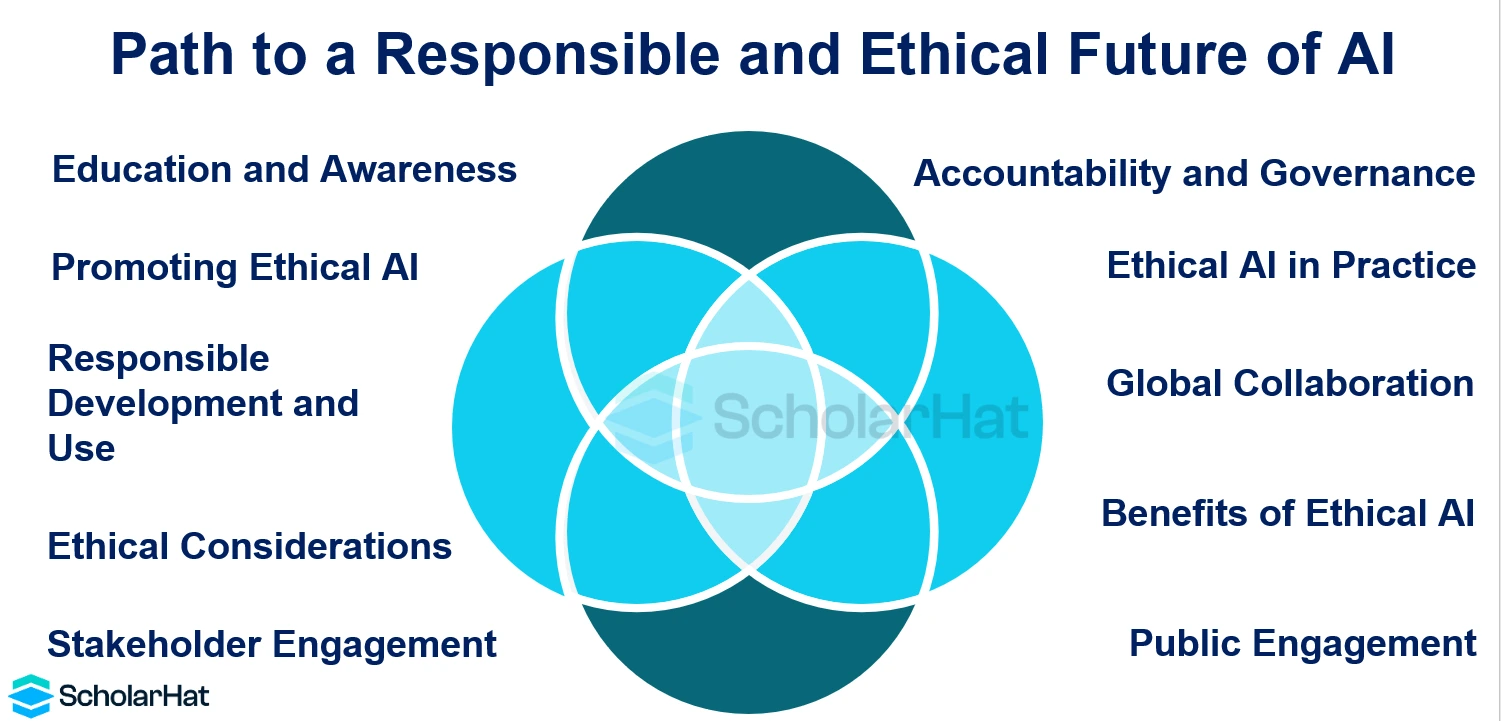

6. The Future of Ethical AI: What’s Next?

As AI technology continues to advance, the challenges of ethical AI development will evolve. New issues are already emerging, such as deep learning systems that can generate highly realistic fake content (deepfakes) and the use of AI in autonomous weapons. These challenges will require innovative solutions and a commitment to continuous learning and adaptation.

Source: ScholarHat

The future of ethical AI will likely involve more robust collaboration between developers, policymakers, and civil society. As AI becomes more integrated into our lives, the need for broad-based discussions about its ethical implications will only grow.

Public awareness and engagement will be crucial in shaping the direction of AI development, ensuring that it remains aligned with societal values.

To stay ahead of these challenges, it’s crucial for all stakeholders—developers, businesses, governments, and individuals—to remain informed and involved in the conversation about ethical AI. By doing so, we can help guide AI’s development in a way that maximises its benefits while minimising its risks.

Conclusion

Ethical AI is not just a technical challenge; it’s a moral imperative. As AI systems become more powerful and pervasive, ensuring they operate within ethical boundaries becomes increasingly essential. By understanding the challenges and actively working to overcome them, we can create AI that serves all of humanity fairly and responsibly.

As we continue to innovate and push the boundaries of what AI can do, keeping ethical considerations at the forefront of development is crucial. Whether you’re a developer, a business leader, or a concerned citizen, the ethical implications of AI should be a key consideration in your work and daily life.

Let’s all participate in the ongoing conversation about ethical AI development. By doing so, we can help ensure that the AI systems of the future are not only intelligent but also just and equitable for everyone.