Introduction

Artificial intelligence (AI) is transforming our world at a lightning pace. From chatbots that assist us with customer service to algorithms that predict health outcomes, AI is woven into the fabric of our daily lives. However, with this rapid advancement comes a significant concern: the balance between innovation and privacy.

As businesses and developers rush to leverage AI’s capabilities, the ethical implications of data use are more critical than ever. In a recent survey by the Pew Research Center, 80% of Americans expressed concern over how their personal information is used by tech companies (Pew Research, 2023). This raises an important question: how can we foster innovation while ensuring privacy is respected and upheld?

This post explores the intricate dance between AI implementation and ethical considerations. We’ll delve into AI’s benefits, the privacy challenges it presents, the ethical frameworks guiding its development, and practical strategies for balancing these often conflicting interests. By the end, we hope you will have a clearer picture of how to innovate responsibly and ethically in a technology-driven world.

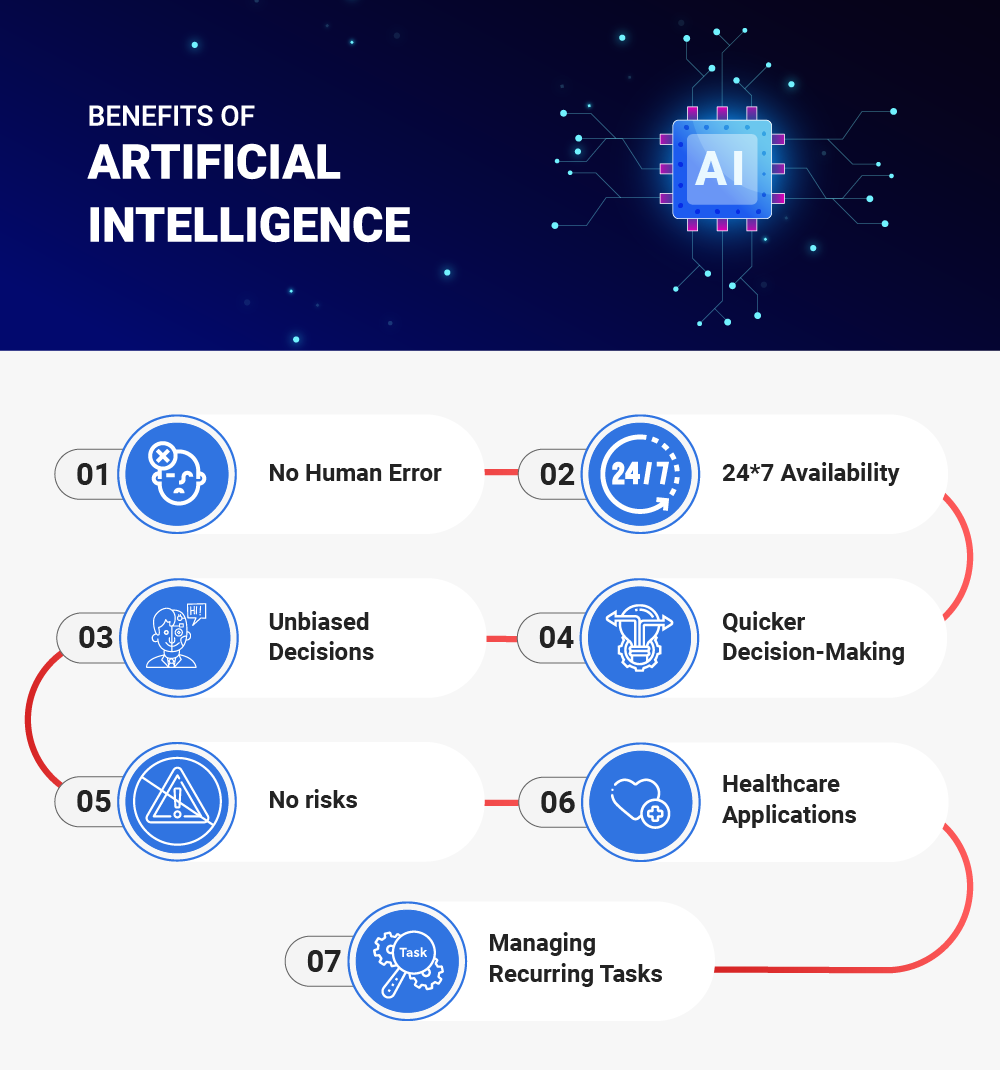

Understanding AI and Its Benefits

Artificial intelligence refers to machines and software that mimic human intelligence and perform tasks that typically require human thought, such as problem-solving, learning, and adaptation. Its applications are vast, ranging from healthcare and finance to everyday life through smart home devices and virtual assistants.

Source: HData Systems

In healthcare, for example, AI algorithms analyse vast amounts of data to provide more accurate diagnoses, recommend personalised treatments, and even predict patient outcomes. According to a report from Accenture, AI applications in healthcare could create $150 billion in annual savings for the U.S. healthcare system by 2026 (Accenture, 2023). This highlights AI’s potential to drive efficiency and improve patient care, ultimately enhancing the quality of life for many individuals.

Businesses are also leveraging AI to enhance decision-making processes and streamline operations. Companies use AI to analyse consumer behaviour, optimise supply chains, and predict market trends. For instance, a McKinsey study estimated that companies implementing AI could boost their cash flow by 25% by 2030 (McKinsey, 2024). This competitive edge is crucial in today’s fast-paced business environment.

Moreover, AI enhances user experience. Personalised recommendations on platforms like Netflix and Amazon keep users engaged and satisfied. For example, personalisation algorithms can increase sales by up to 10% in the retail sector (Bain & Company, 2023). As we marvel at these innovations, it’s essential to consider the ethical implications of how AI operates—especially concerning privacy.

Privacy Concerns in AI Implementation

As we embrace AI’s benefits, addressing the privacy concerns that arise with its implementation is vital. One significant issue is the sheer volume of data AI systems collect and process. These systems often use vast datasets, including personal information, to function effectively. For instance, AI algorithms might analyse social media activity, purchase history, and even biometric data to generate insights.

This extensive data collection raises serious privacy concerns. High-profile data breaches have become all too common, compromising the personal information of millions. In 2023, for example, a breach at a major healthcare provider affected over 4 million individuals, exposing sensitive patient information (Healthcare IT News, 2023). Such incidents highlight the potential risks involved in mishandling personal data.

Another critical aspect is user consent and transparency. Many users are unaware of how their data is collected, used, and stored. According to a 2023 survey by the Data Protection Commission, 62% of respondents expressed concerns about the transparency of data collection practices in AI applications (DPC, 2023). Without clear communication, users may feel uneasy about the very systems designed to serve them.

Moreover, ethical concerns arise because AI systems can inadvertently perpetuate biases present in their training data. If the datasets used are biased, the models trained on them can produce unfair outcomes. For instance, a study by ProPublica found that a risk-assessment algorithm used in the criminal justice system disproportionately flagged African American defendants as higher risk, illustrating the real-world consequences of biased AI (ProPublica, 2023).
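One way to make "disproportionately flagged" measurable is to compare false positive rates across groups: among people who did not go on to reoffend, what share were labelled high risk? The Python sketch below assumes each record is a simple (group, predicted_high_risk, reoffended) tuple; the function name and the toy data are hypothetical and are not a reproduction of ProPublica's analysis.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is (group, predicted_high_risk, reoffended). A false positive
    is a person flagged as high risk who did not go on to reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders who were flagged high risk
    negatives = defaultdict(int)  # all non-reoffenders, per group
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical toy data, invented purely for illustration.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]
print(false_positive_rates(records))  # {'A': 0.5, 'B': 0.25}
```

With these invented numbers, group A's false positive rate is twice group B's, which is the kind of gap a fairness audit is meant to surface before a model is deployed.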

As we navigate these challenges, it’s crucial to establish a foundation of trust and accountability in AI practices. This involves fostering transparency and ensuring that users are informed about their data rights and the measures taken to protect their privacy. Businesses prioritising user education can create an environment where consumers feel empowered rather than vulnerable.

Ethical Frameworks in AI Development

To effectively address the privacy concerns surrounding AI, we need robust ethical frameworks guiding its development and implementation. Various ethical theories can help us navigate this complex landscape.

First, let’s consider utilitarianism, which focuses on maximising overall happiness and minimising harm. In the context of AI, this means balancing the benefits of AI innovations against the potential harms associated with privacy breaches. The challenge lies in determining what constitutes “the greatest good.” For example, while AI can enhance healthcare delivery, it should not come at the cost of patient privacy.

Next is deontological ethics, which emphasises duties and rights. This approach holds that individuals have a right to privacy that should not be violated, regardless of AI’s potential benefits. Upholding this right is crucial to ensuring that AI systems operate ethically and respect individual autonomy.

When it comes to practical principles, several key aspects emerge:

- Fairness: AI systems must be designed to prevent bias and ensure equitable treatment for all individuals. This involves rigorous testing and validation of AI models to identify and mitigate bias.

- Accountability: Developers and organisations must take responsibility for the actions of their AI systems. This includes being transparent about data usage and AI decision-making processes.

- Privacy: Protecting user data must be a top priority. Implementing data minimisation strategies—collecting only the necessary data for AI functionality—can help mitigate risks.
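Data minimisation can be enforced in code with an allow-list applied before anything is stored. The sketch below assumes a hypothetical recommendation feature that needs only three fields; the field names and the `minimise` helper are illustrative, not taken from any particular system.

```python
# Hypothetical allow-list: the only fields this imaginary recommendation
# feature actually needs. Every other field is dropped before storage.
REQUIRED_FIELDS = {"user_id", "purchase_category", "purchase_month"}

def minimise(record: dict) -> dict:
    """Keep only allow-listed fields and discard everything else."""
    return {k: v for k, v in record.items() if k in REQUIRED_FIELDS}

raw = {
    "user_id": "u123",
    "purchase_category": "books",
    "purchase_month": "2024-05",
    "full_name": "Jane Doe",
    "home_address": "1 Example Street",
    "date_of_birth": "1990-01-01",
}
print(minimise(raw))
# {'user_id': 'u123', 'purchase_category': 'books', 'purchase_month': '2024-05'}
```

An allow-list is usually safer than a block-list: if a new, sensitive field is added upstream, it is dropped by default instead of slipping through.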

Organisations also play a vital role in shaping AI ethics. Existing regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) set data protection and privacy standards. However, self-regulation within the AI industry is equally important. Establishing ethical AI guidelines and best practices can help build trust with users.

An ethical AI framework must also involve continuous dialogue with stakeholders, including ethicists, technologists, and users, to ensure that ethical considerations evolve alongside technological advancements. Integrating these ethical frameworks into AI development allows us to create systems that innovate responsibly while safeguarding user privacy.

Balancing Innovation and Privacy

Finding the right balance between innovation and privacy is not just a challenge; it’s an essential aspect of responsible AI development. Many organisations are already taking steps to achieve this balance, and their experiences offer valuable insights.

Take Apple, for example. The company has made privacy a cornerstone of its brand identity, consistently emphasising that user data is not sold to third parties. Its commitment to privacy is evident in features like App Tracking Transparency, which requires apps to obtain user permission before tracking them across other companies’ apps and websites. This approach builds trust with users and demonstrates that prioritising privacy can coexist with innovation.

Another compelling case is Google, which has developed AI techniques that prioritise user privacy. Its federated learning approach trains models on users’ devices, so raw data never leaves the device; only model updates are sent back and combined. This method enhances user privacy while still enabling the development of robust AI models; Google has used it, for example, to improve next-word suggestions in its Gboard keyboard.

Organisations can adopt strategies for ethical AI implementation, such as privacy-by-design. This approach means integrating privacy considerations into the entire AI development lifecycle. It ensures that privacy is a primary consideration rather than an afterthought. Regular audits and assessments of AI systems can help identify and mitigate privacy risks, fostering a culture of accountability.

Moreover, engaging stakeholders—including users, ethicists, and technologists—is crucial in the development process. By involving diverse perspectives, organisations can better understand the potential implications of their AI systems and make more informed decisions.

By collaborating with ethics experts, organisations can develop innovative AI systems that respect privacy. When organisations prioritise ethical considerations, they create a foundation of trust, ultimately leading to greater user satisfaction and loyalty.

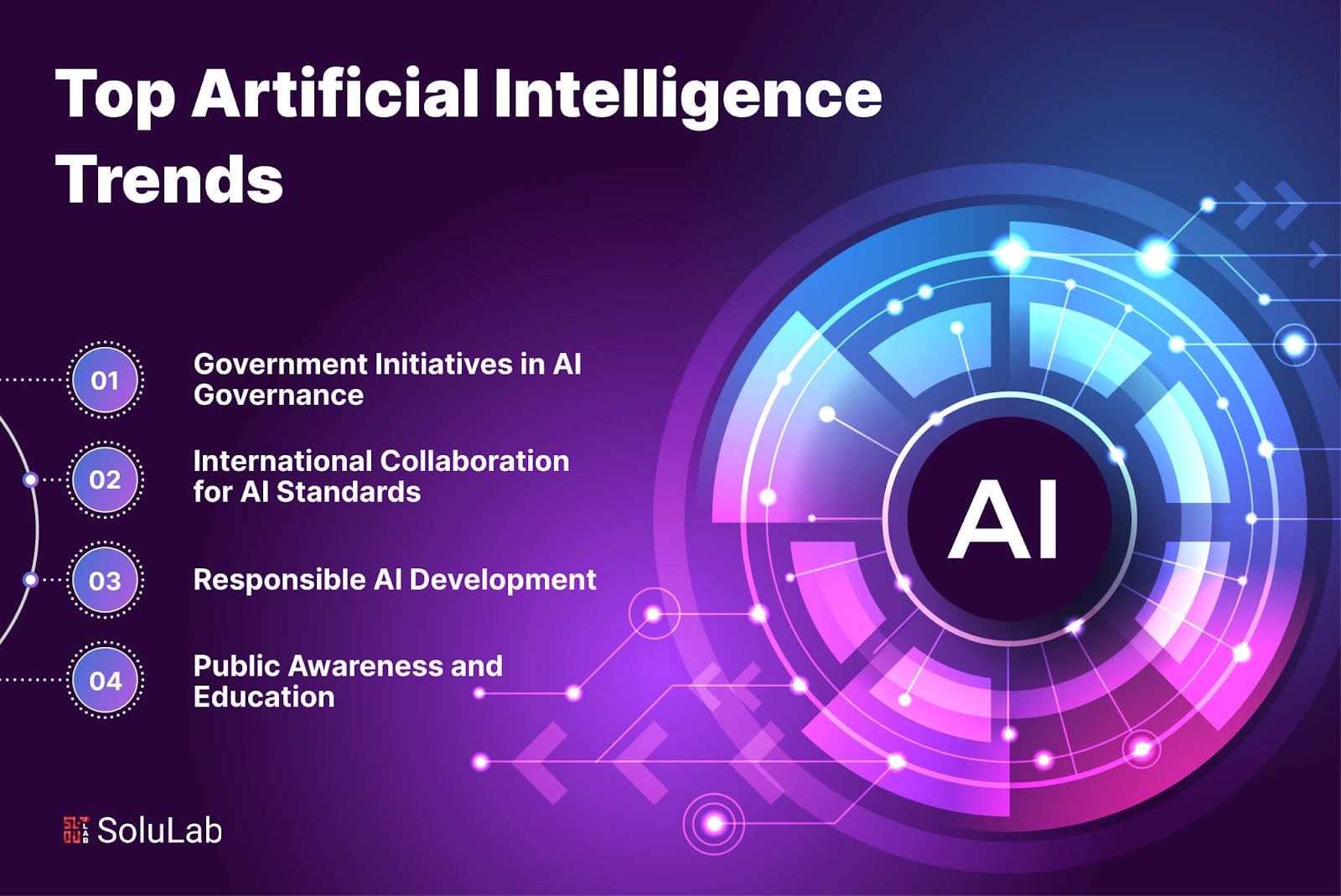

Future Trends in AI and Privacy

As technology evolves, so do the ethical challenges associated with AI and privacy. Emerging technologies offer promising solutions that address some of these concerns.

One such advancement is federated learning, which enables AI models to be trained across many devices without gathering users’ raw data in one place. This method allows organisations to learn from data held in many locations while keeping that data local. As more companies adopt this approach, we may see a shift toward more privacy-conscious AI applications.

Source: Solu Lab
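The core loop of federated learning can be sketched in a few lines. The example below is a toy version of federated averaging (often called FedAvg): each simulated device runs a few gradient-descent steps on its own data and returns only its updated model weight, which the server averages in proportion to each device's data size. The one-parameter linear model, the learning rate, and the simulated devices are all assumptions made for illustration.

```python
import random

def local_update(w, data, lr=0.5, epochs=5):
    """Train on one device's private data and return only the new weight.

    Model: y ≈ w * x with mean squared error. The (x, y) pairs stay inside
    this function; only the updated parameter w leaves the "device".
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_averaging(clients, rounds=20, w=0.0):
    """Server loop: send w to every device, then average the returned
    weights, weighting each device by how many examples it holds."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in clients]
        w = sum(w_k * n_k for w_k, n_k in updates) / total
    return w

def make_client(n):
    """Simulate one device holding n private samples of y = 3x plus noise."""
    xs = [random.uniform(0, 1) for _ in range(n)]
    return [(x, 3 * x + random.gauss(0, 0.1)) for x in xs]

random.seed(0)
clients = [make_client(n) for n in (20, 50, 30)]  # three hypothetical devices
print(round(federated_averaging(clients), 2))  # prints a value close to 3.0
```

Model updates can still leak some information about the underlying data, which is why real deployments typically combine federated learning with techniques such as secure aggregation or differential privacy.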

Differential privacy is another exciting development. This technique adds carefully calibrated random noise to data or query results, so that the output reveals very little about any single individual while still allowing organisations to glean valuable insights. Major companies, including Apple and Google, have implemented differential privacy in their data practices, setting a precedent for responsible data usage in AI. According to a study by MIT, implementing differential privacy can reduce the risk of identifying individuals in datasets by 99% (MIT Technology Review, 2023).
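A classic way to apply differential privacy to a single statistic is the Laplace mechanism: compute the true answer, then add random noise whose scale depends on how much one person can change that answer (the query's sensitivity) and on a privacy parameter, epsilon. The sketch below assumes a simple counting query over a hypothetical list of ages; it illustrates the technique and is not a description of how Apple or Google implement differential privacy.

```python
import random

def laplace_noise(scale: float) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean `scale`
    is Laplace-distributed with that scale.
    """
    rate = 1.0 / scale
    return random.expovariate(rate) - random.expovariate(rate)

def private_count(values, predicate, epsilon: float) -> float:
    """Return a differentially private count using the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon. Smaller epsilon means more noise.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon)

# Hypothetical dataset: 1,000 randomly generated ages.
random.seed(42)
ages = [random.randint(18, 90) for _ in range(1000)]

print("true count:   ", sum(1 for a in ages if a >= 65))
print("private count:", round(private_count(ages, lambda a: a >= 65, epsilon=0.5), 1))
```

Lowering epsilon adds more noise, strengthening privacy at the cost of accuracy. Answering the same query repeatedly also uses up privacy, which is why real systems track a total privacy budget.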

Looking ahead to 2025, the role of AI ethics boards may become more prominent. These boards could oversee AI development, ensuring that ethical considerations are integrated at every stage. They would provide a space for dialogue between developers, ethicists, and users, creating accountability and fostering trust.

Furthermore, we can expect increased regulatory scrutiny on AI implementations. Governments and organisations will likely enhance data privacy and security policies, holding companies accountable for their practices. This evolving landscape will require organisations to stay ahead of compliance measures while continuing to innovate responsibly.

The future of AI will undoubtedly present new challenges and opportunities. Organisations can navigate this landscape responsibly by prioritising privacy and ethics, driving innovation while safeguarding user rights.

Conclusion

In conclusion, the interplay between AI innovation and privacy is complex and crucial. As we have explored, AI has the potential to revolutionise various sectors and offer numerous benefits. However, this potential must be balanced with ethical considerations that respect user privacy.

From understanding the privacy risks associated with data collection to adopting ethical frameworks prioritising fairness and accountability, organisations can lead the way in responsible AI development. Case studies of companies like Apple and Google illustrate that fostering innovation without compromising privacy is possible.

As we move forward, we must advocate for transparency and engage in meaningful discussions about AI’s ethical implications. These discussions should include developers and organisations as well as users, who deserve to be informed and empowered.

By championing a culture of ethical AI, we can ensure that everyone enjoys the benefits of innovation while safeguarding individual rights. Let’s embrace the future of AI responsibly, where privacy and innovation can coexist harmoniously.